Tech fam, listen up! A research team at Peking University in the Chinese mainland has just revealed a groundbreaking AI computer architecture that delivers a nearly fourfold speed boost for core tasks like the Fourier Transform. Their study, published on Friday (Jan 9) in Nature Electronics, marks a big leap for AI hardware.

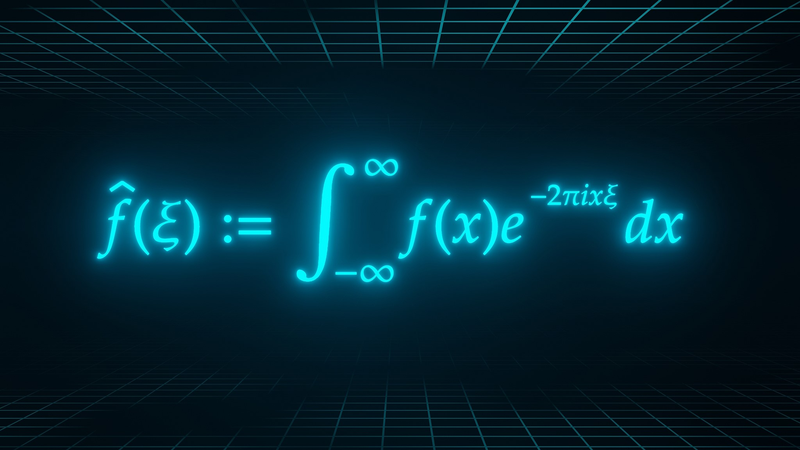

The Fourier Transform is the universal signal translator behind everything from music streaming quality to image filters. By converting complex signals into a frequency view, it powers countless apps in science and engineering. The new design marries two cutting-edge devices into one multi-physics computing system, letting each physical domain—whether it’s electrical current, charge, or light—handle what it does best.
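To make the "frequency view" concrete, here is a minimal software sketch in Python. It is a textbook radix-2 Cooley-Tukey FFT, checked against a naive discrete Fourier transform. It is not the multi-physics hardware from the study. The function names `dft` and `fft` and the two-tone test signal are hypothetical choices for illustration. When the input mixes two sine waves, the spectrum shows two clear peaks at exactly the frequencies that went in.

```python
import cmath
import math

def dft(signal):
    """Naive discrete Fourier transform: on the order of N^2 complex multiply-adds."""
    n = len(signal)
    return [
        sum(signal[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]

def fft(signal):
    """Recursive radix-2 Cooley-Tukey FFT: on the order of N log N operations.

    The input length must be a power of two (illustrative restriction).
    """
    n = len(signal)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a power of two")
    if n == 1:
        return [complex(signal[0])]
    even = fft(signal[0::2])  # transform of even-indexed samples
    odd = fft(signal[1::2])   # transform of odd-indexed samples
    out = [0j] * n
    half = n // 2
    for k in range(half):
        # "Butterfly": combine the two half-size spectra with a twiddle factor.
        twiddle = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + half] = even[k] - twiddle
    return out

# Hypothetical test signal: sine waves at 3 and 10 cycles per window, 64 samples.
N = 64
samples = [
    math.sin(2 * math.pi * 3 * t / N) + 0.5 * math.sin(2 * math.pi * 10 * t / N)
    for t in range(N)
]

spectrum = fft(samples)
# Both methods should agree up to floating-point rounding.
assert all(abs(a - b) < 1e-9 for a, b in zip(dft(samples), spectrum))

# For a real input the upper half of the spectrum mirrors the lower half.
magnitudes = [abs(c) for c in spectrum[: N // 2]]
peaks = sorted(range(N // 2), key=lambda k: magnitudes[k], reverse=True)[:2]
print(sorted(peaks))  # → [3, 10]
```

Both routines produce the same spectrum. The difference is cost: the naive version grows with N², while the FFT grows with N log N.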

Tao Yaoyu from the Institute for Artificial Intelligence at the same university explains, "This architecture enables different computing paradigms to operate within their optimal physical domains, improving computational efficiency." The result? The team ramped up transform speeds from about 130 billion operations per second to roughly 500 billion, a gain of about 3.8 times, without sacrificing accuracy or hiking energy use.
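The headline numbers are easy to sanity-check. The sketch below divides the two quoted rates. Then, purely as an illustration, it converts each rate into 1024-point transforms per second using the common 5 × N × log2(N) operation-count convention for a complex FFT. That convention, the transform size, and the helper names are assumptions. The article does not say how the team counted operations.

```python
import math

OLD_RATE = 130e9  # ops/sec, "about 130 billion" as quoted in the article
NEW_RATE = 500e9  # ops/sec, "roughly 500 billion" as quoted in the article

def speedup(old_rate: float, new_rate: float) -> float:
    """Ratio of new throughput to old throughput."""
    return new_rate / old_rate

def fft_op_count(n: int) -> float:
    """ASSUMPTION: the widely used 5*N*log2(N) estimate for an N-point complex FFT."""
    return 5 * n * math.log2(n)

def transforms_per_second(rate: float, n: int) -> float:
    """How many N-point FFTs fit in one second at a given ops/sec rate (illustrative)."""
    return rate / fft_op_count(n)

print(f"speedup ≈ {speedup(OLD_RATE, NEW_RATE):.2f}x")  # → speedup ≈ 3.85x
for rate in (OLD_RATE, NEW_RATE):
    per_sec = transforms_per_second(rate, 1024)
    print(f"{rate / 1e9:.0f} billion ops/s -> about {per_sec:,.0f} 1024-point FFTs/s")
```

The ratio comes out at about 3.85, which matches the "nearly fourfold" headline.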

So what’s next? Faster AI models, sharper edge sensing in your gadgets, more efficient autonomous vehicles, and even smoother brain-computer interfaces. For young pros and techies across South and Southeast Asia—from Hyderabad’s startup hubs to Singapore’s innovation labs—this is a signpost for where hardware meets AI magic.

Stay tuned as we track how multi-physics AI computing reshapes everything from on-device apps to global comms systems. Drop your thoughts below on what you’d love to see powered by this speed leap! 😊

Reference(s):

China's new AI computer architecture achieves multi-fold speed leap

cgtn.com